DETAILED PROJECTS

IGNOTO VIBRATIONS XR SCULPTURE 2022

CLIENT: RIO ART MUSEUM (MAR), GOETHE INSTITUTE

POSITION: XR DIRECTOR AND CREATOR

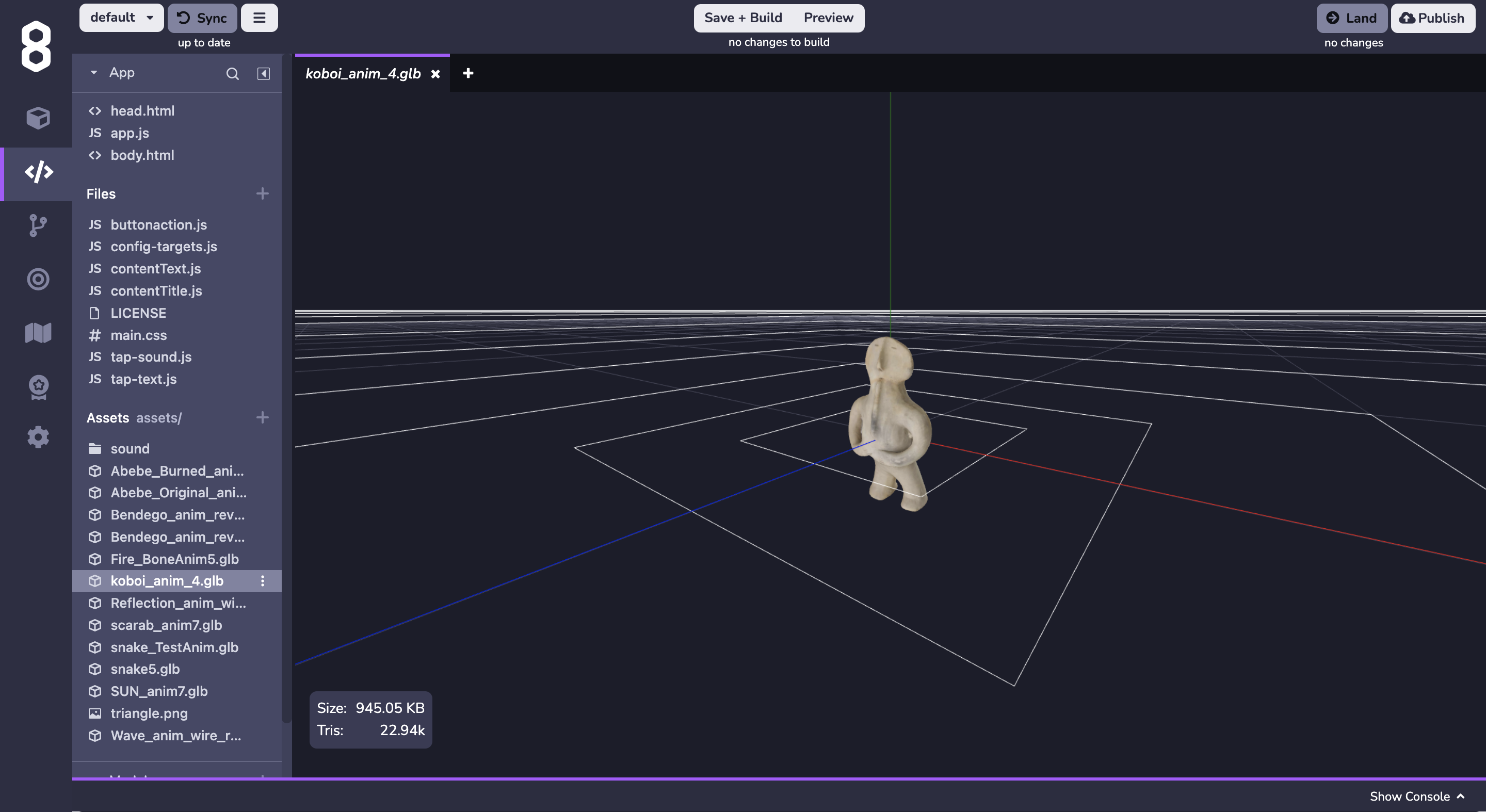

TOOLS: 8TH WALL, BLENDER, MIRO

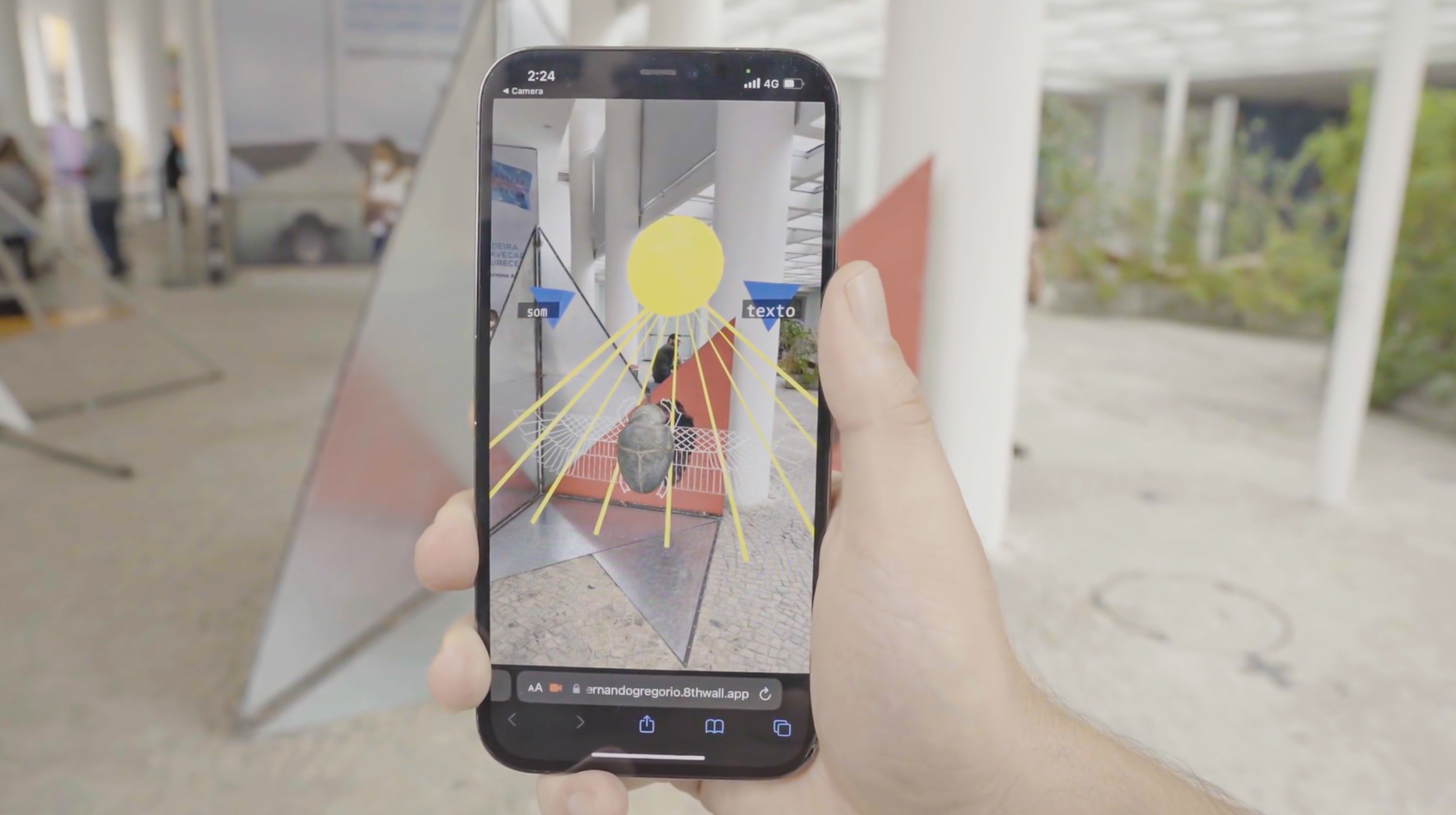

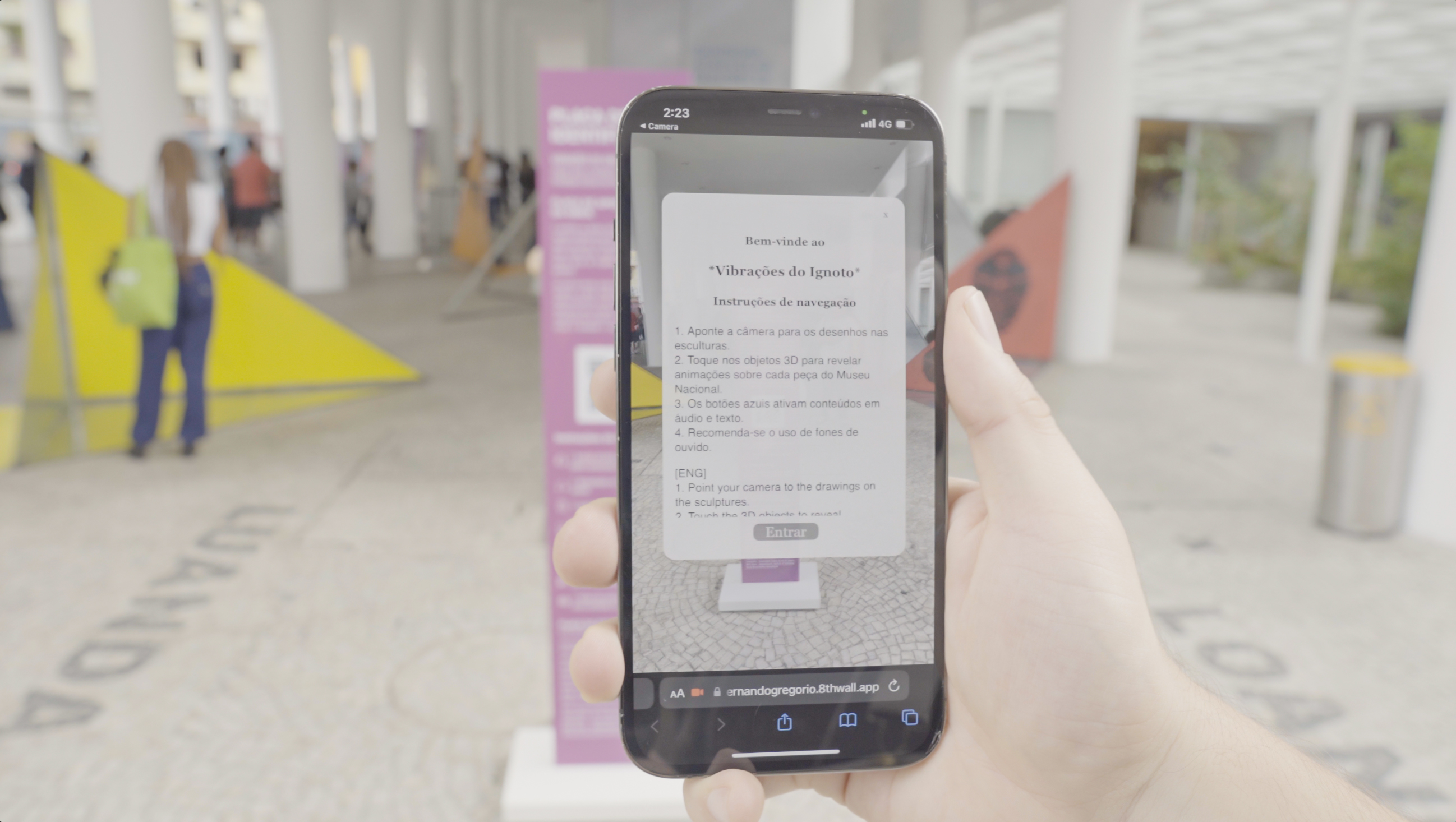

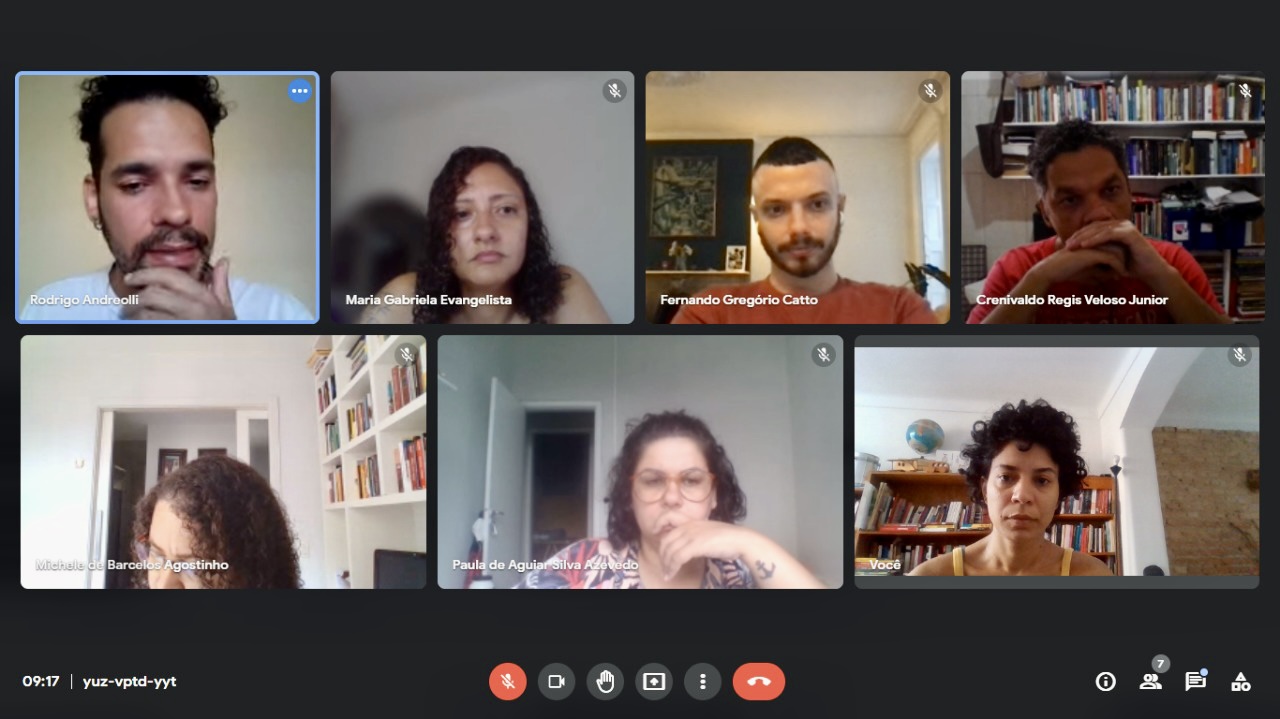

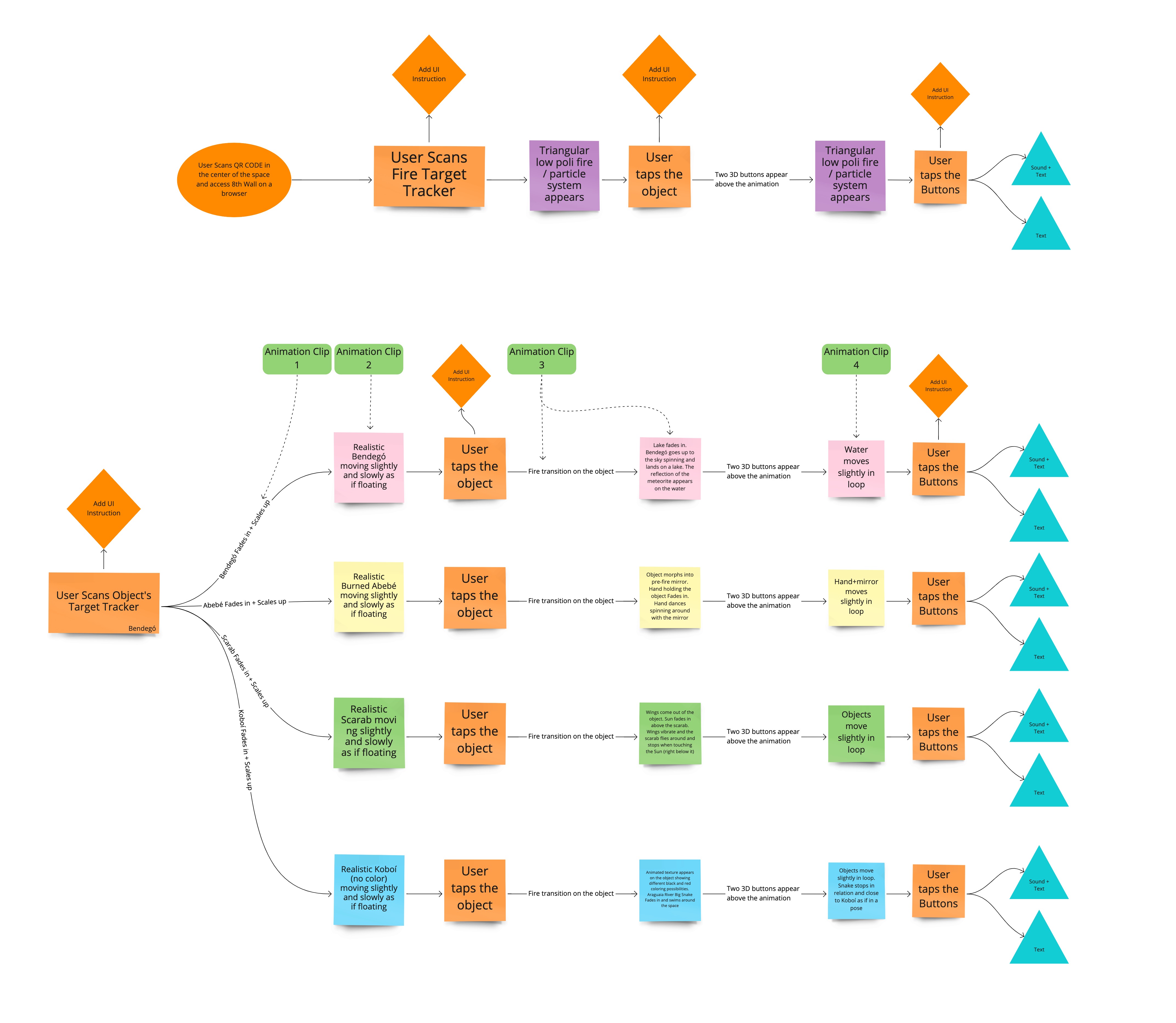

Ignoto Vibrations is a prototype mixed-reality sculpture series and performance commissioned for the Museum Conference at the Rio Art Museum (MAR) in Brazil. I co-created the project with the artist Rodrigo Andreolli and the architect Marília Piraju. As XR director, I coordinated an international team of tech professionals (an AR developer, a 3D modeler, and an animator) and designed the user experience.

The fire at the National Museum of Rio de Janeiro on September 2, 2018, produced images charged with symbolic force about the conditions under which Brazil's scientific and cultural research is treated. The fire caused the loss of the most important museum collections in the country's history, but it also made us reflect on the weight of certain historical narratives, urging us to trace new memories that re-exist beyond the destroyed matter. Fire, in its tragic manifestation, also reaches its transcendental aspect.

Based on a study of narratives shared by researchers and members of the National Museum's collection rescue team, this project proposes a prototype mixed-reality exhibition, motivated by 3D scans of some of the recovered pieces and by the memories lost in the fire.

CREATION PROCESS

CREDITS

Research Direction, Creation, Production: Rodrigo Andreolli

Mixed Reality Director, Creation: Fernando Gregório

Creation, Scenic Architecture: Marília Pirajú

Augmented Reality Development: Name Atchareeya Jattuporn

Animation and 3D Modeling: Gabriel Brasil

Texts, Drawings and Performance:

Bruxa Profana Latino-americana

Clamor

Fernando Gregorio

João Vitor Cavalcante

Moonlight Mendez

Marília Piraju

Rodrigo Andreolli

Vick Nefertiti

Musicians

André Souza

Kaio Ventura

Design, sound editing and audiovisual recording

Renato Pascoal

Illustrations

Nash Laila

Vincent

Assistant

Valerie Chen

Locksmith

Maurício - Fina Serralheria - Bixiga, São Paulo

Consultancy

Mother Celina de Xango

Thanks

Antonia Cattan

Carolina Bonfim

Clelio de Paula

Fel Barros

Flavia Meirelles

Gustavo Caboco

Henrique Entratice

Lucas Canavarro

Luiz Cruz

Maria Catarina Duncan

Renato Caldas

Rosabelli Coelho-Keyssar

Collaboration

Goethe Institut Rio de Janeiro

Fernanda Galvão, Julian Fuchs, Robin Mallick

National Museum of Rio de Janeiro

Crenivaldo Veloso Jr., João Pacheco de Oliveira, Maria Elizabeth Zucolotto, Maria Gabriela Evangelista, Juliana Sayão, Michele de Barcelos Agostinho, Paula de Aguiar Silva Azevedo, Pedro Luis Von Seehausen, Rafael de Andrade, Sergio Alex Kugland de Azevedo, Silvia Reis

LAPID - Image and Signal Processing Laboratory - UFRJ - Federal University of Rio de Janeiro

Bendegó and Heart Scarab 3D Models provided by LAPID

3D Abebé de Oxum and Ritxokó models created by Rodrigo Andreolli and Gabriel Brasil from images by Pedro Von Seehausen

Support

MISTI Brazil - Massachusetts Institute of Technology

Rio Art Museum

Teatro Oficina

Universidade Antropófaga
